GenAI in 2025/26 - What's Beyond the Honeymoon Phase?

With another GPT release, it's time to revisit my talk for Macmillan's Global Teachers' Festival, which tried to take a balanced look at proper GenAI use. Let's also predict future trends!

Everybody’s talking about the new OpenAI release, GPT-5. Yet another one!

First, I always read what the much quicker and keener testers have to say (they also tend to have access to the paid versions). I follow Darren Coxon, Amanda Bickerstaff from AI in Education, Leon Furze, and Philippa Hardman. After all, I can’t test everything all at once and I don’t have the time :)

So, what I can see from these hot takes so far is that this new release isn’t as impressive as it promises to be. While its main promise is “thinking”, it doesn’t really seem to be doing what we’d consider thinking. And while the study modes (or Gemini’s Guided Learning mode) promise to study with you, they still study for you.

So I think it’s time to revisit my talk at this year’s Global Teachers' Festival for Macmillan, which takes a balanced look at GenAI tools, and tries to predict what’s going to come after the so-called Honeymoon Phase.

The never-ending hype train

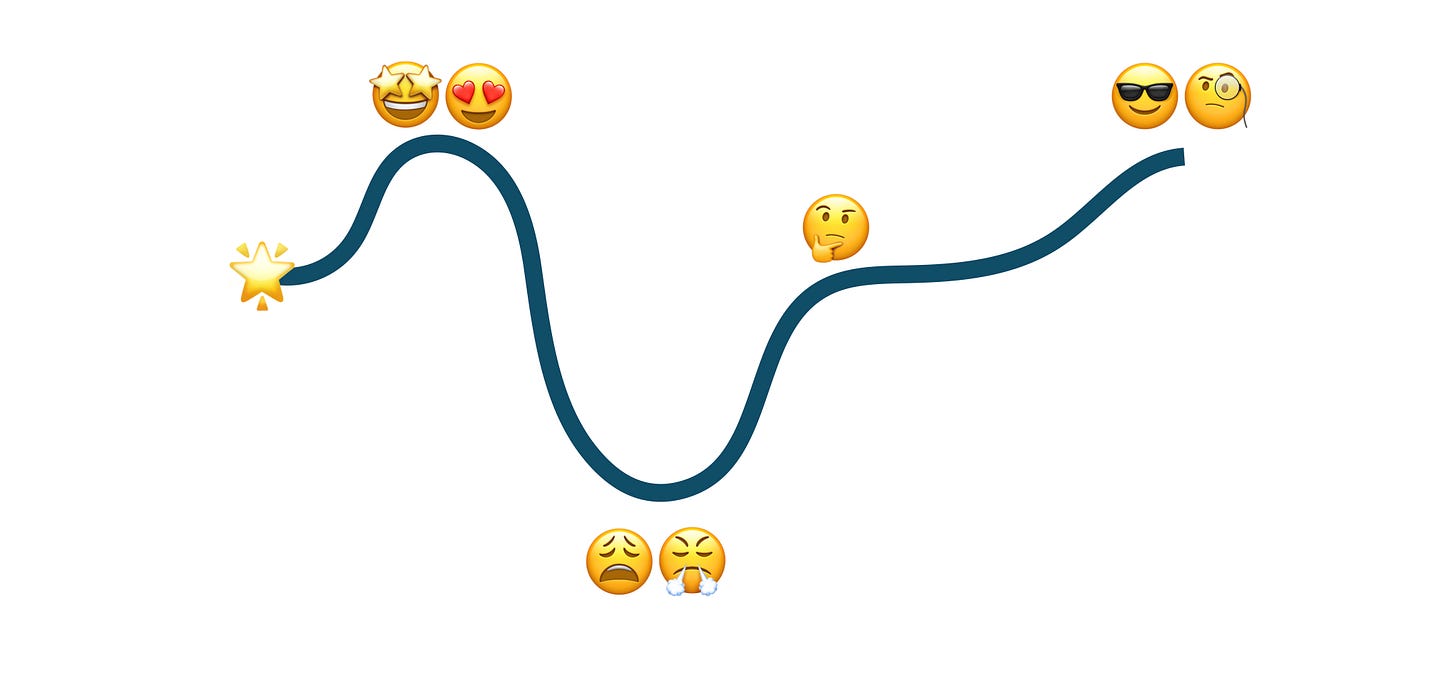

Several researchers have described our relationship with new experiences, and their models seem to follow a similar pattern. Oberg’s original four stages of culture shock (honeymoon, anxiety / frustration, adjustment, acceptance) and Gartner’s hype cycle (innovation trigger, peak of inflated expectations, trough of disillusionment, slope of enlightenment, plateau of productivity) are some of the most famous ones out there.

These theories all say that we first get into a kind of trance because we’re so excited about the new thing. But then we slowly come to our senses, start to see the negatives along with the positives, and eventually find our balanced stance.

The problem with GenAI advancements is that this hype train never seems to end. There’s always a new model, a new release, a new feature. And what will that lead to? I’m not sure about others but my best guess is burnout.

At least that’s what’s definitely happening to me. I’ve learned how to use chatbots for my needs and I’m familiar with their main functions, so I find it harder and harder to be convinced that this one is THE ONE. The constant addition of new tweaks wears you out after a while, and you just stop caring. I can definitely imagine that most practitioners feel this way.

Getting a bit too comfortable and forgetting about AI ethics

What this sort of leads to is that most teachers get a bit too comfortable with how they use GenAI tools. After all, “If the chatbot can do it, why shouldn’t I do it?” - you might think.

But after almost 3 years of chatting away with ChatGPT and co., we should really start meeting the standards of ethical, responsible, and critical use. Let me show you a couple of beliefs teachers might still hold but which should be reconsidered asap.

1. Using a chatbot for every single thing

Yes, but we need to pay attention to the huge environmental impact of using GenAI for every single possible thing. Let’s try and think first about what we could do without it and only use it when we really need it.

The other downside of overuse is the slow deterioration of our creative thinking capabilities. If we don’t even try to rack our brains for at least a second (the brain works like a muscle, after all), we’re going to find cognitive work harder and harder.

That’s one of the reasons why ChatGPT now has Study mode and Gemini has Guided Learning. The developers have also noticed (or business analysts told them to pretend that they noticed it) that adding a couple of layers before giving you the answer straight away might lead to better understanding and higher retention.

But then again, we now have a thing called “zero click internet”, which means that you don’t even have to click on a search result to find the answer to your question, because Google’s AI search answers it right away. That’s not going to help you develop your critical thinking skills, is it?

2. Uploading whatever you can into the chatbot

I couldn’t believe my eyes, but I can still find websites that list prompts suggesting you upload entire books to get summaries, multiple choice questions, study guides and so on.

That’s not a problem per se; Google’s NotebookLM offers this service in a neat package, too. The problem is if you upload copyrighted material. So make sure you only upload texts that are openly licensed or in the public domain!

The other thing to pay attention to is data protection. Don’t upload anything that contains sensitive or personal data, such as doctor’s notes, personal photos, contracts, etc.

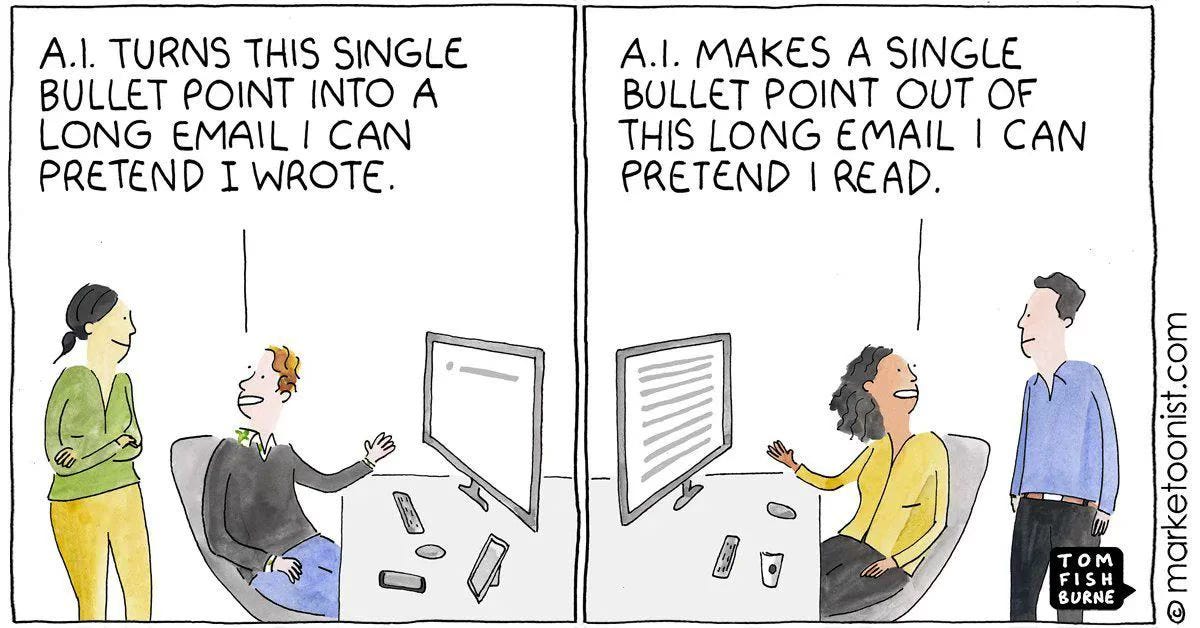

3. Getting two machines to have a nice chat

There are so many memes about this! And not without reason. That’s actually the part that makes me feel a little happy inside, because I think this could finally shed some light on the hypocrisy of our daily lives.

Most of what we do is just a ritual. And instead of doing what matters, we dance around it in circles, wrapping all of it into nice costumes of irritatingly long and complicated texts. So, what I’d do personally is get rid of whatever seems to be done only to get some boxes ticked (for example, essays without a real and authentic purpose).

What’s going to come in 2026?

Good question, really :D No clear idea yet but these are my guesses:

GenAI tools will try to dominate a big chunk of education, both private and state, and to shape the way we learn.

Instructional design is also going to make use of AI practitioners in various ways. The question is whether humans and AI will be able to work together in innovative ways or whether we’re going to let AI take the lead.

Teachers will slowly lose interest in the new releases and trends, so their AI use might need readjustment and recalibration.

Publishers might start building GenAI features into their ebooks and classroom e-packages to let students and teachers customise the material on the go, right in the classroom.

Connected to this previous point, highly personalised and better quality AI-generated learning content might appear within language learning apps.

There is going to be an even greater need for critical thinking skills, which will hopefully help us wade through all the deepfakes and other fake information that have already started to proliferate on social media.

And of course there’s something that’s already out there but I still have little clue about how it’s going to crash the gates: agentic AI. An AI agent can basically solve complex tasks autonomously. It can plan a series of actions, carry them out, and finally present the end result to you while you’re taking a nap.

What do you think the trends will be in 2026?

You can also watch the recording of my talk here.